Political theories and AI

Through a few new projects and opportunities, I’ve had reason to circle back to the topic of Artificial Intelligence and ethics. I wanted to jot down a few notes, since some recent reading and conversations have clarified some ideas here.

In my work with Jake Goldenfein on this topic (published 2021), we framed the ethical problem of AI in terms of its challenge to liberalism, which we characterize in terms of individual rights (namely, property and privacy rights), a theory of why the free public market makes the guarantees of these rights sufficient for many social goods, and a more recent progressive or egalitarian tendency. We then discuss how AI technologies challenge liberalism and require us to think about post-liberal configurations of society and computation.

A natural reaction to this paper, especially given the political climate in the United States, is “aren’t the alternatives to liberalism even worse?” and it’s true that we do not in that paper outline an alternative to liberalism which a world with AI might aspire to.

John Mearsheimer’s The Great Delusion: Liberal Dreams and International Realities (2018) is a clearly written treatise on political theory. Mearsheimer rose to infamy in 2022 after the Russian invasion of Ukraine because of widely circulated videos of a 2015 lecture in which he argued that U.S. foreign policy was at fault for Russia’s invasion of Crimea in 2014. It is because of that infamy that I decided to read The Great Delusion, which was a Financial Times Best Book of 2018. The Financial Times editorials have since turned on Mearsheimer. We’ll see what they say about him in another four years. However politically unpopular he may be, I found his points interesting and decided to look at his more scholarly work. I have not been disappointed: he articulates political philosophy clearly, and I will use these articulations. I won’t analyze his international relations theory here.

Putting Mearsheimer’s international relations theories entirely aside for now, I’ve been pleased to find The Great Delusion to be a thorough treatise on political theory, and it goes to lengths in Chapter 3 to describe liberalism as a political theory (which will be its target). Mearsheimer distinguishes between four different political ideologies, citing many of their key intellectual proponents.

- Modus vivendi liberalism. (Locke, Smith, Hayek) A theory committed to individual negative rights, such as private property and privacy, against impositions by the state. The state should be minimal, a “night watchman”. This can involve skepticism about the ability of reason to achieve consensus about the nature of the good life; political toleration of differences is implied by the guarantee of negative rights.

- Progressive liberalism. (Rawls) A theory committed to individual rights, including both negative rights and positive rights, which can be in tension. An example positive right is equal opportunity, which requires interventions by the state in order to guarantee. So the state must play a stronger role. Progressive liberalism involves more faith in reason to achieve consensus about the good life, as progressivism is a positive moral view imposed on others.

- Utilitarianism. (Bentham, Mill) A theory committed to the greatest happiness for the greatest number. Not committed to individual rights, and therefore not a liberalism per se. Utilitarian analysis can argue for tradeoffs of rights to achieve greater happiness, and is collectivist, not individualist, in the sense that it is concerned with utility in aggregate.

- Liberal idealism. (Hobson, Dewey) A theory committed to the realization of an ideal society as an organic unity of functioning subsystems. Not committed to individual rights primarily, so not a liberalism, though individual rights can be justified on ideal grounds. Influenced by Hegelian views about the unity of the state. Sometimes connected to a positive view of nationalism.

This is a highly useful breakdown of ideas, which we can bring back to discussions of AI ethics.

Jake Goldenfein and I wrote about ‘liberalism’ in a way that, I’m glad to say, is consistent with Mearsheimer. We too identify right- and left-wing strands of liberalism. I believe our argument about AI’s challenge to liberal assumptions still holds water.

Utilitarianism is the foundation of one of the most prominent versions of AI ethics today: Effective Altruism. Much has been written about Effective Altruism and its relationship to AI Safety research. I have expressed some thoughts. Suffice it to say here that there is a utilitarian argument that ‘ethics’ should be about prioritizing the prevention of existential risk to humanity, because existential catastrophe would prevent the high-utility outcome of humanity-as-joyous-galaxy-colonizers. AI is seen, for various reasons, to be a potential source of catastrophic risk, and so AI ethics is about preventing these outcomes. Not everybody agrees with this view.

For now, it’s worth mentioning that there is a connection between liberalism and utilitarianism through theories of economics. While some liberals are committed to individual rights for their own sake, or because of negative views about the possibility of rational agreement about more positive political claims, others have argued that negative rights and lack of government intervention lead to better collective outcomes. Neoclassical economics has produced theories and ‘proofs’ to this effect, which rely on mathematical utility theory, which is a successor to philosophical utilitarianism in some respects.

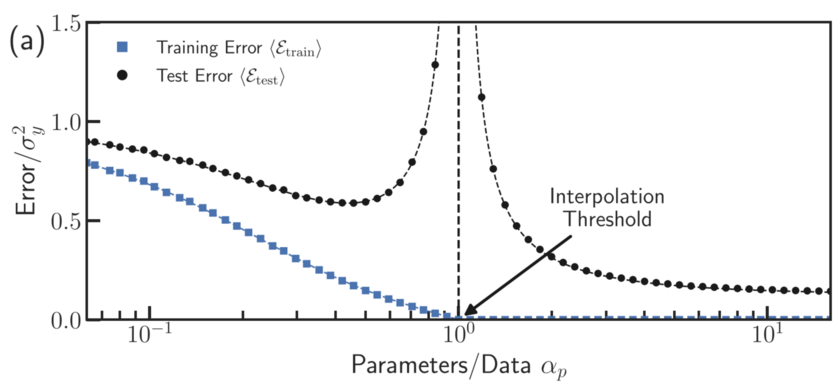

It is also the case that a great deal of AI technology and technical practice is oriented around the vaguely utilitarian goal of ‘utility maximization’, though this is more about the mathematical operationalization of instrumental reason and less about a social commitment to utility as a political goal. AI practice and neoclassical economics are quite aligned in this way. If I were to put the point precisely, I’d say that the reality of AI, by exposing bounded rationality and its role in society, shows that arguments that negative rights are sufficient for utility-maximizing outcomes are naive, and so is a disappointment for liberals.

I was pleased that Mearsheimer brought up what he calls ‘liberal idealism’ in his book, despite it being perhaps a digression from his broader points. I have wondered how to place my own work, which draws heavily on Helen Nissenbaum’s theory of Contextual Integrity (CI), which is heavily influenced by the work of Michael Walzer. CI is based on a view of a society composed of separable spheres, with distinct functions and internally meaningful social goods, which should not be directly exchanged or compared. Walzer has been called a communitarian. I suggest that CI might be best seen as a variation of liberal idealism, in that it orients ethics towards a view of society as an idealized organic unity.

If the present reality of AI is so disappointing, then we must try to imagine a better ideal, and work our way towards it. I’ve found myself reading more and more work, such as that of Felix Adler and Alain Badiou, that advocates for the need for an ideal model of society. What we are currently missing is a good computational model of such a society, one which could do for idealism what neoclassical economics did for liberalism: namely, create a blueprint for a policy and science of its realization. If we were to apply AI to the problem of ethics, it would be good to use it this way.